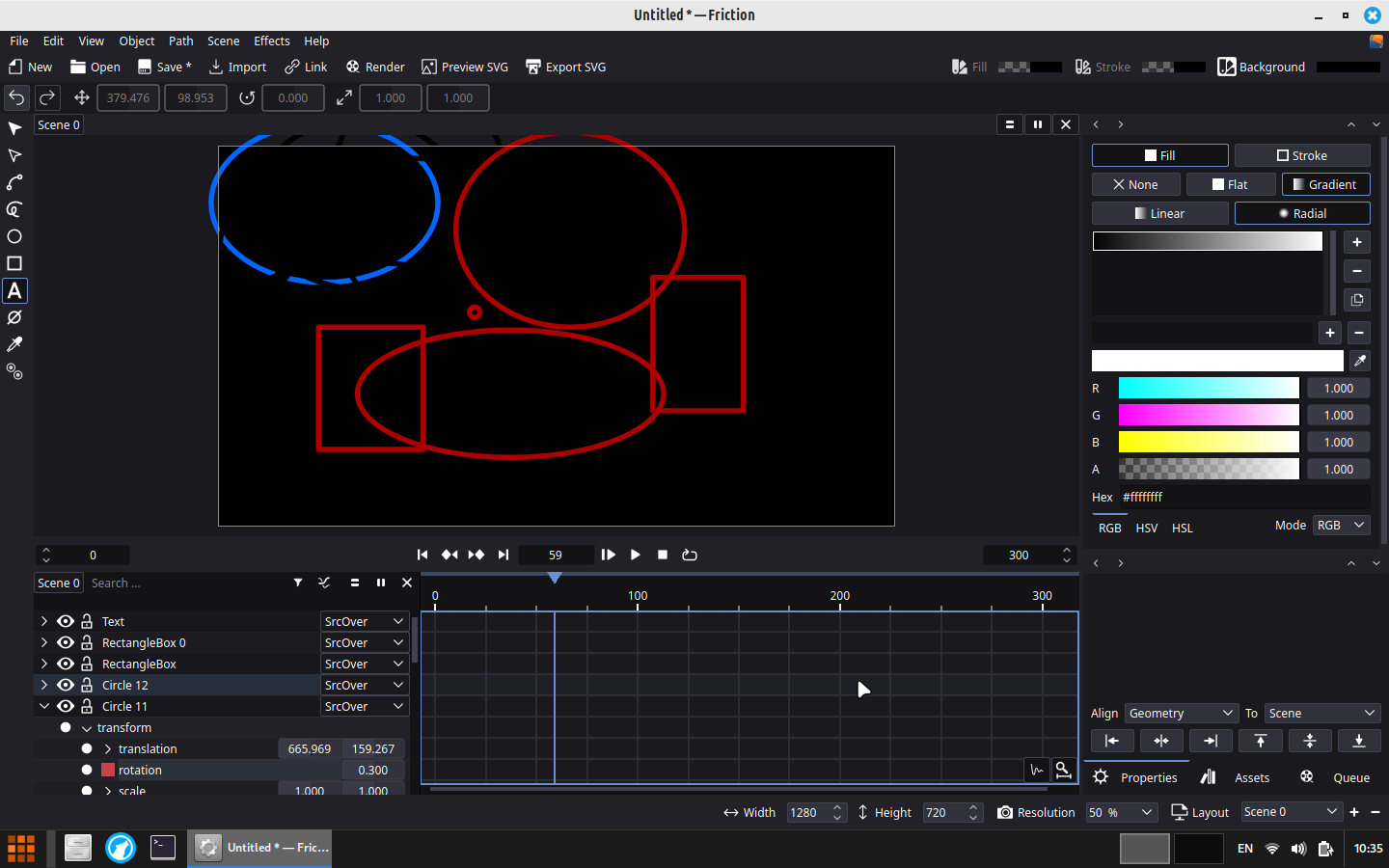

I wanted to test the speed difference between ng_bridge(4) + ng_eiface(4) and bridge(4) + epair(4) on real hardware, so I used my Fujitsu Q556 running FreeBSD 14.3-RELEASE with two Bastille VNET jails. It has an Intel Core i5-6400T (4 × 2.2 GHz), 8 GiB of RAM and a 1 Gbit/s Realtek NIC (re0). Since all of the test traffic stays inside the host, the NIC's 1 Gbit/s link speed is not the limiting factor here.
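For readers who haven't used Netgraph, the ng_bridge + ng_eiface side can be wired up by hand with ngctl(8). The sketch below only prints the commands. It assumes ng_ether(4) is loaded so that re0 shows up as a Netgraph node, follows the pattern of the example in the ng_bridge(4) man page, and uses a hypothetical bridge name `bridge0` with plain `linkN` hook numbering. Bastille may set things up differently. Each `mkpeer ... eiface` creates a new `ngethN` interface that can then be handed to a jail's VNET.

```python
"""Sketch: print ngctl(8) commands for a hypothetical ng_bridge + ng_eiface setup.

Assumptions (not taken from the post): ng_ether is loaded, the bridge node is
named "bridge0", and each jail gets one ng_eiface on the next free linkN hook.
"""


def netgraph_bridge_commands(uplink: str, bridge: str, jail_count: int) -> list[str]:
    cmds = [
        # Connect the NIC's "lower" hook (the wire side) to a new bridge node's link0.
        f"ngctl mkpeer {uplink}: bridge lower link0",
        # Name the new bridge node so later commands can address it.
        f"ngctl name {uplink}:lower {bridge}",
        # Connect the NIC's "upper" hook (the host's network stack) to link1.
        f"ngctl connect {uplink}: {bridge}: upper link1",
        # Accept frames addressed to other MACs and keep foreign source MACs intact.
        f"ngctl msg {uplink}: setpromisc 1",
        f"ngctl msg {uplink}: setautosrc 0",
    ]
    # One ng_eiface per jail; the eiface node's "ether" hook attaches to the bridge.
    for i in range(jail_count):
        cmds.append(f"ngctl mkpeer {bridge}: eiface link{i + 2} ether")
    return cmds


if __name__ == "__main__":
    for line in netgraph_bridge_commands("re0", "bridge0", jail_count=2):
        print(line)
```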

Results from 10-second iperf3 runs:

- Host to jail (ng_bridge + ng_eiface): ~6.0 Gbit/s

- Jail to jail (ng_bridge + ng_eiface): ~5.5 Gbit/s

- Host to jail (bridge + epair): ~5.5 Gbit/s

- Jail to jail (bridge + epair): ~5.0 Gbit/s
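The figures are approximate, so any comparison is too, but a quick calculation from the numbers above puts Netgraph roughly 9-10% ahead on both paths. A minimal Python sketch of that arithmetic, using only the values listed:

```python
# Approximate throughput (Gbit/s) from the 10-second iperf3 runs listed above.
results = {
    "host_to_jail": {"netgraph": 6.0, "bridge_epair": 5.5},
    "jail_to_jail": {"netgraph": 5.5, "bridge_epair": 5.0},
}


def relative_gain(new: float, baseline: float) -> float:
    """Percentage by which `new` exceeds `baseline`."""
    return (new / baseline - 1.0) * 100.0


for path, r in results.items():
    gain = relative_gain(r["netgraph"], r["bridge_epair"])
    print(f"{path}: Netgraph ahead by {gain:.1f}%")
```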

With Netgraph, CPU usage was at around 100%, whereas with bridge + epair only one thread was at 100% while the other three sat at around 10-25%.

I also noticed that the iperf3 test between the two Netgraph jails showed 5-6k retransmits per second (iperf3's Retr column), so something is clearly off there.
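If the runs are captured with iperf3's JSON output, e.g. `iperf3 -c <jail address> -t 10 -J > run.json` (the post doesn't say how the numbers were recorded, so this is an assumption), the throughput and retransmit rate can be pulled out with a small script. A sketch, assuming the `end.sum_sent` fields that iperf3 emits for TCP tests; `run.json` is a hypothetical file name:

```python
import json
import sys


def summarize_iperf3(report: dict) -> dict:
    """Average throughput and retransmits per second from an `iperf3 -J` TCP report."""
    sent = report["end"]["sum_sent"]
    seconds = sent["seconds"]
    return {
        "gbit_per_s": sent["bits_per_second"] / 1e9,
        # "retransmits" is missing where iperf3 cannot read TCP retransmit counters.
        "retransmits_per_s": sent.get("retransmits", 0) / seconds,
    }


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python3 summarize.py run.json
    with open(sys.argv[1]) as f:
        print(summarize_iperf3(json.load(f)))
```

Looking at the per-second `intervals[].sum.retransmits` values in the same JSON would show whether the retransmits are spread evenly or come in bursts.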

I've read that Netgraph has some locking issues, which would probably explain the high CPU load. That makes it a poor fit for high-traffic environments, at least for now.

The improvements already made to bridge(4), the ones still to come with FreeBSD 15, and the higher complexity of Netgraph are somewhat curbing my enthusiasm right now.

Still, using Netgraph to network jails and VMs on FreeBSD just 'feels' much cleaner, since packets are handled by a graph of processing nodes inside the kernel rather than by virtualised network interfaces. Ideally, I'd like to get VLANs and LACP working with Netgraph so that I can compare the two approaches side by side on my "production" servers.

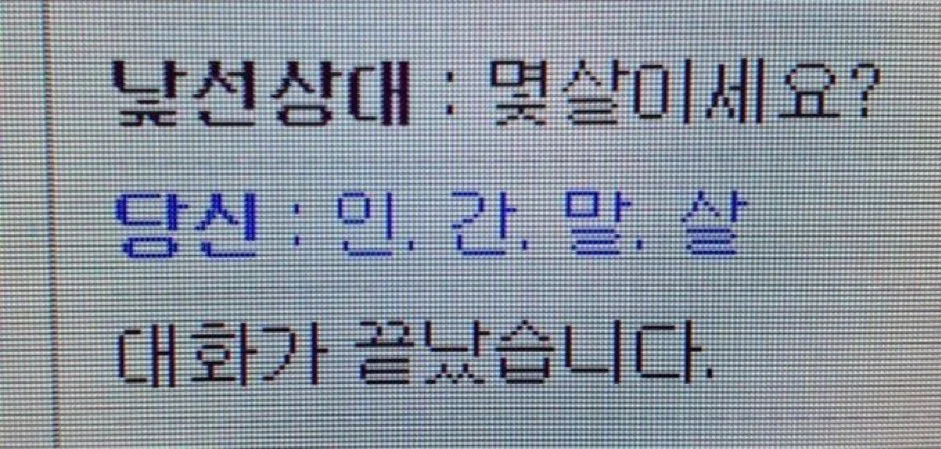
