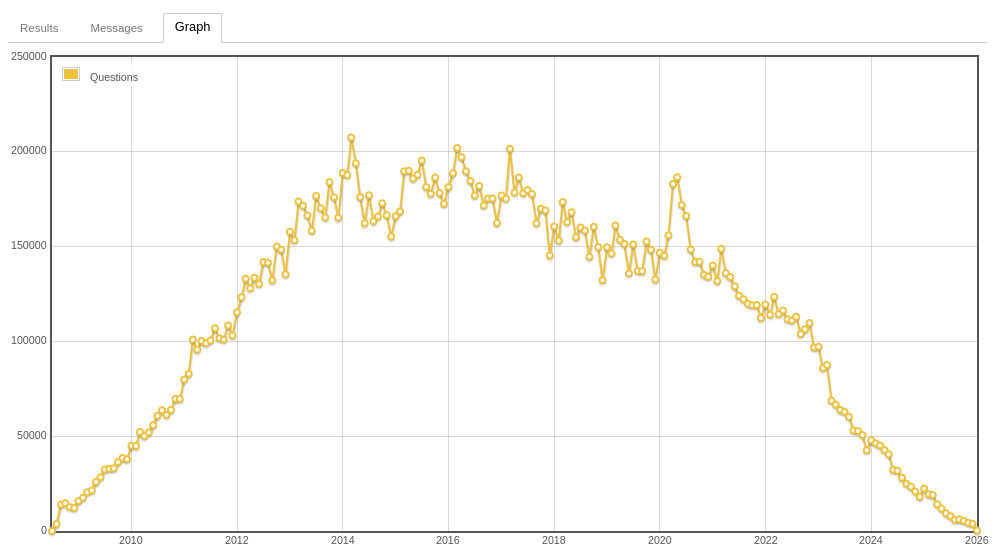

StackOverflow’s fall is a sign of something more troubling.

Sure, if your main metric is the round-trip time it takes to get a technical question answered, then it may not look that bad.

After all, instead of asking human experts who may take days or weeks to answer, beginners can submit their questions directly to an LLM trained on that very knowledge and get answers within seconds - perhaps not perfect, perhaps in need of some refinement amid model hallucinations, but in most cases enough to get them started.

What many seem to ignore, though, is that the training set that went into the LLM was harvested from tens of thousands of curated answers manually submitted by human experts over the years to the StackExchange platforms.

We know a lot about even the most obscure use cases of collections and itertools in Python, or the best composition patterns in Spring Boot, because thousands of human experts put together thoughtful answers and articles on those topics, and they are all publicly available.

But technology is never static. Programming languages and frameworks come and go. Where will the knowledge about such future craft live? In a world where humans no longer willingly and freely post such knowledge on StackOverflow or Reddit, how can such knowledge be fed to increasingly hallucinating stochastic parrots?

Or do we accept that human craft is only required to feed AI models that haven’t yet caught up with it, and then it can be nicely packaged and provided through chatbots owned by trillion-dollar companies?

As if the only value of developing problem-solving skills in science and engineering were to feed that knowledge to our AI overlords, only for us to be tossed away like hollow carcasses afterwards?
