Although everyone is into large models these days I like small models that you can understand.

I good example is the Bradley-Terry model of tournaments that I've mention before. If you have historical data on who beat whom in the past then you can build a model by assigning everyone a score s_i and say that the probability that i beats j is

f(s_i-s_j)

where f is the logistic function

f(x)=1/(1+exp(-x)).

The task is then to fit s_i to your historical data. This formula is almost the simplest thing you might make up using the toolkit of machine learning, but it turns out to be a maximum entropy model. (Weird how this happens more often than it should.)

The task of fitting the s_i has a long history and a popular method was developed by Zermelo (yes, that Zermelo) back in 1929.

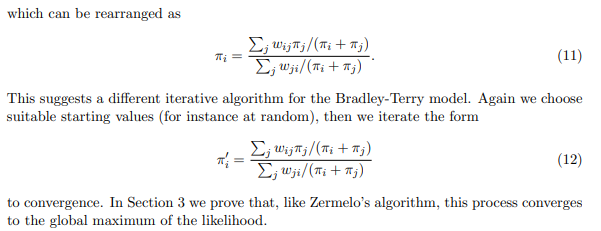

Anyway, I noticed a very recent paper that does a simple algebraic rearrangement of the underlying mathematics and results in a much faster algorithm to find the globally optimal fit.

https://jmlr.org/papers/volume24/22-1086/22-1086.pdf

Tangential: I'm amused by the fact that this model of game playing was developed by Milton (Terry) and (Ralph) Bradley but has nothing to do with Milton Bradley.