Back in December I got some Mellanox ConnectX-6 Dx cards, and now I've finally gotten around to playing with them. I got them because I was interested in two features:

- A true integrated switch between all VM virtual functions and the external links, with VM-to-VM communication, VLAN filtering rules, LACP bonding of the links and so on

- Hardware offloading for TLS encryption

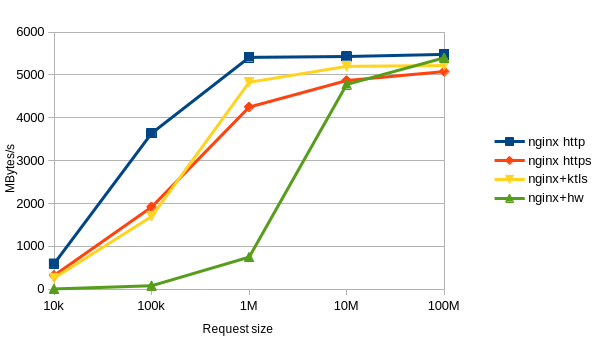

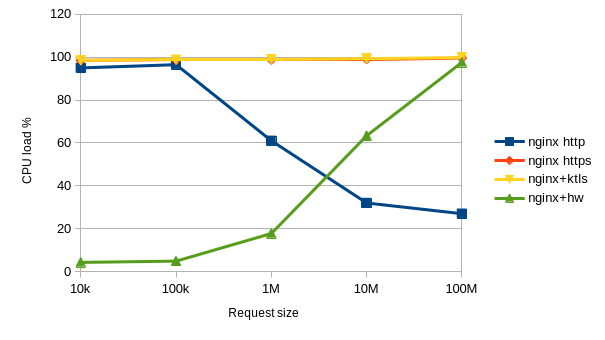

I've now benchmarked the TLS offload, and unfortunately I'm underwhelmed. A 🧵