Sorry for linking to Substack, but this one is so very good:

https://garymarcus.substack.com/p/a-knockout-blow-for-llms

A few excerpts:

Apple has a new paper; it’s pretty devastating to LLMs.

Whenever people ask me why I (contrary to widespread myth) actually like AI, and think that AI (though not GenAI) may ultimately be of great benefit to humanity, I invariably point to the advances in science and technology we might make if we could combine the causal reasoning abilities of our best scientists with the sheer compute power of modern digital computers.

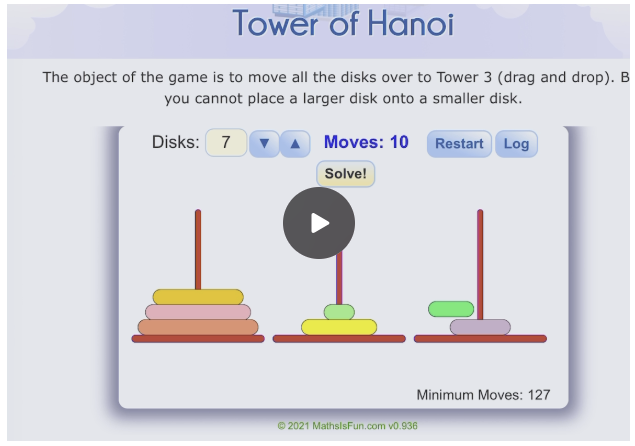

What the Apple paper shows, most fundamentally, regardless of how you define AGI, is that LLMs are no substitute for good well-specified conventional algorithms. (They also can’t play chess as well as conventional algorithms, can’t fold proteins like special-purpose neurosymbolic hybrids, can’t run databases as well as conventional databases, etc.)