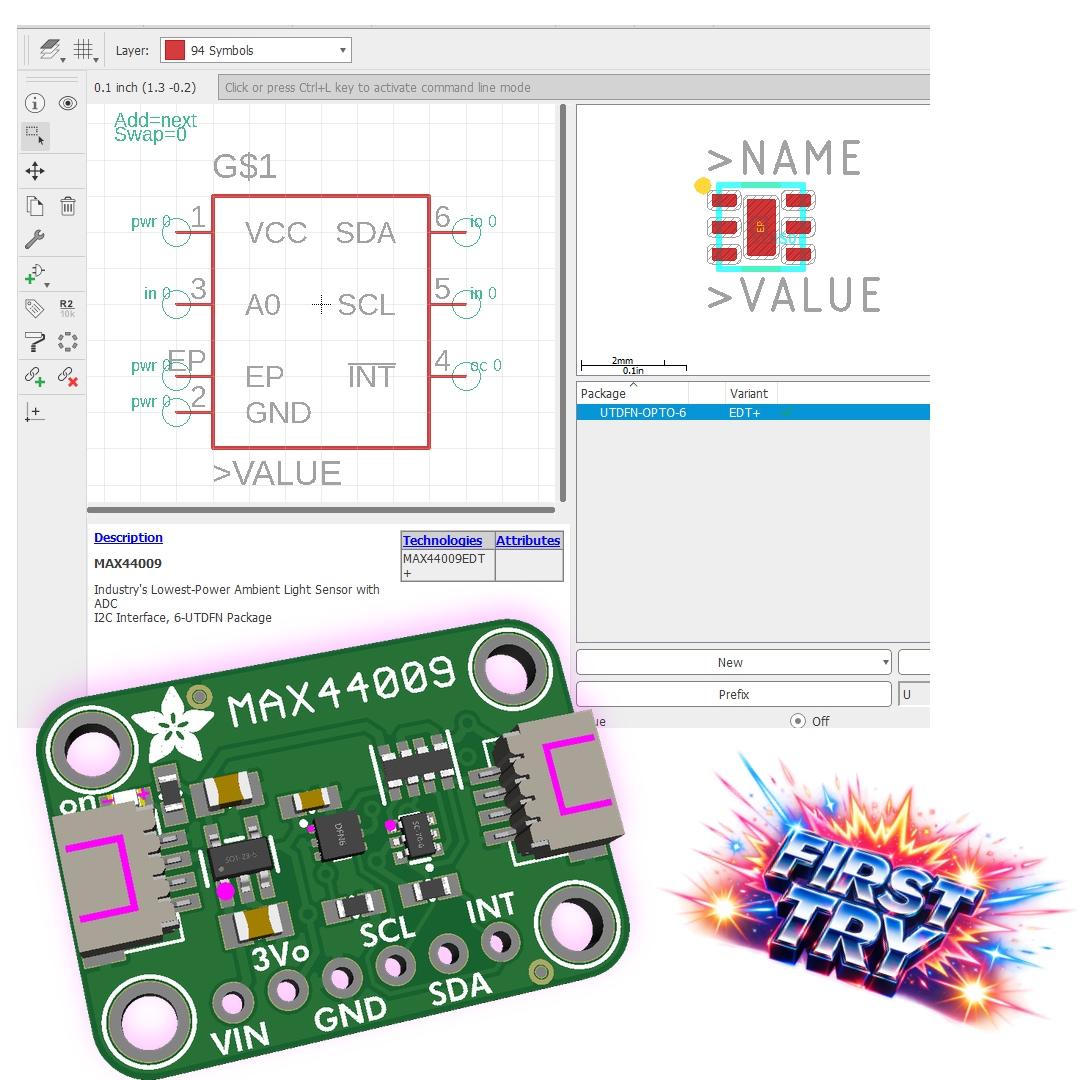

Here's Our First Gemini Deep Think LLM-Assisted Hardware Design

We've been using LLMs for software and firmware for years... now we're trying hardware. We threw a MAX44009 datasheet at Gemini Deep Think, asked for an Eagle CAD library file, and about 10 minutes later it popped out working XML. We loaded it in Eagle, checked the pins and dimensions, and rolled with it.
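Since the library file is plain XML, part of the pin check can be scripted before opening Eagle at all. Here's a minimal sketch, not the process we actually used: it assumes the usual Eagle library layout (`packages`, `symbols`, `devicesets` with `connect` entries) and confirms that every symbol pin maps to a pad that exists in the package. The `check_library` helper and the `SAMPLE_LBR` snippet are hypothetical, and the two-pin demo part is made up for illustration; it is not the MAX44009 pinout.

```python
import xml.etree.ElementTree as ET

# Hypothetical, heavily trimmed library for illustration only.
# This is NOT the MAX44009 footprint or pinout.
SAMPLE_LBR = """<eagle version="9.6.2">
<drawing>
<library>
<packages>
<package name="DEMO_PKG">
<smd name="1" x="-0.65" y="0" dx="0.4" dy="0.6" layer="1"/>
<smd name="2" x="0.65" y="0" dx="0.4" dy="0.6" layer="1"/>
</package>
</packages>
<symbols>
<symbol name="DEMO_SYM">
<pin name="VCC" x="-10.16" y="2.54" length="middle"/>
<pin name="GND" x="-10.16" y="-2.54" length="middle"/>
</symbol>
</symbols>
<devicesets>
<deviceset name="DEMO">
<gates>
<gate name="G$1" symbol="DEMO_SYM" x="0" y="0"/>
</gates>
<devices>
<device name="" package="DEMO_PKG">
<connects>
<connect gate="G$1" pin="VCC" pad="1"/>
<connect gate="G$1" pin="GND" pad="2"/>
</connects>
</device>
</devices>
</deviceset>
</devicesets>
</library>
</drawing>
</eagle>
"""


def check_library(lbr_xml: str) -> list[str]:
    """Return a list of pin/pad mapping problems (empty list means none found)."""
    problems = []
    lib = ET.fromstring(lbr_xml).find("drawing/library")
    if lib is None:
        return ["no <library> element found"]

    # Pad names per package (SMD pads and through-hole pads).
    pads_by_pkg = {
        pkg.get("name"): {el.get("name") for el in pkg if el.tag in ("smd", "pad")}
        for pkg in lib.iterfind("packages/package")
    }
    # Pin names per symbol.
    pins_by_sym = {
        sym.get("name"): {el.get("name") for el in sym.iterfind("pin")}
        for sym in lib.iterfind("symbols/symbol")
    }

    for ds in lib.iterfind("devicesets/deviceset"):
        gate_syms = {g.get("name"): g.get("symbol") for g in ds.iterfind("gates/gate")}
        for dev in ds.iterfind("devices/device"):
            label = f"{ds.get('name')}{dev.get('name') or ''}"
            pads = pads_by_pkg.get(dev.get("package"), set())
            used_pads, used_pins = set(), set()
            for c in dev.iterfind("connects/connect"):
                gate, pin = c.get("gate"), c.get("pin")
                if pin not in pins_by_sym.get(gate_syms.get(gate), set()):
                    problems.append(f"{label}: unknown pin {gate}.{pin}")
                used_pins.add((gate, pin))
                # A connect may list several pads separated by spaces.
                for pad in c.get("pad", "").split():
                    if pad not in pads:
                        problems.append(f"{label}: pad {pad} not in package")
                    used_pads.add(pad)
            # Symbol pins that never got a pad.
            for gate, sym in gate_syms.items():
                for pin in sorted(pins_by_sym.get(sym, set())):
                    if (gate, pin) not in used_pins:
                        problems.append(f"{label}: pin {gate}.{pin} has no pad")
            # Package pads that nothing connects to.
            for pad in sorted(pads - used_pads):
                problems.append(f"{label}: pad {pad} is unconnected")
    return problems


if __name__ == "__main__":
    for line in check_library(SAMPLE_LBR) or ["no pin/pad problems found"]:
        print(line)
```

A script like this only catches structural slips (missing or mismatched pins and pads). Pad sizes, pitch, and pin names still need an eyeball check against the datasheet drawing.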