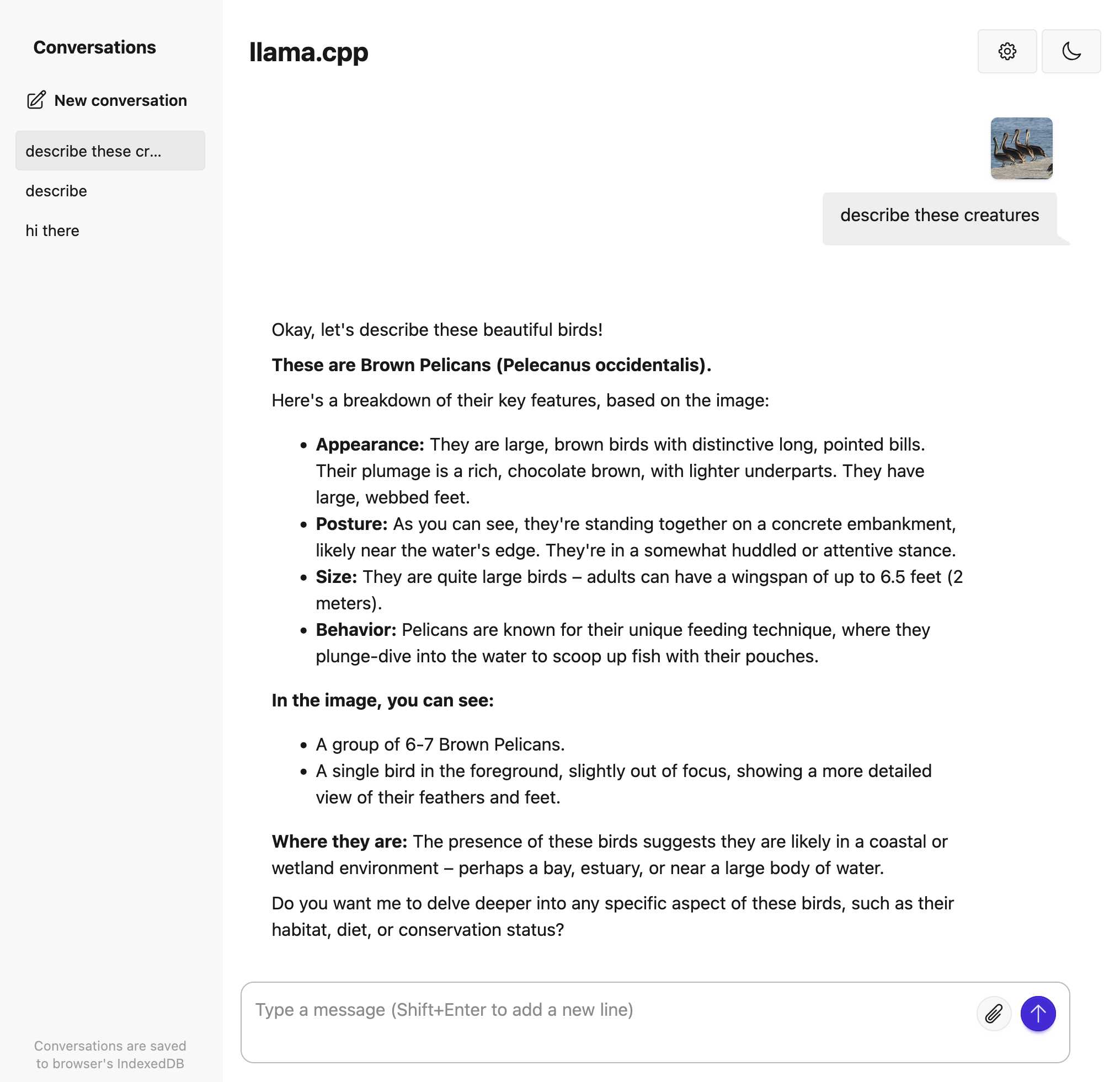

llama.cpp shipped new support for vision models this morning, including macOS binaries (albeit quarantined, so you have to take extra steps to run them) that let you run vision models in a terminal or through a localhost web UI.

My notes on how to get it running on a Mac: https://simonwillison.net/2025/May/10/llama-cpp-vision/