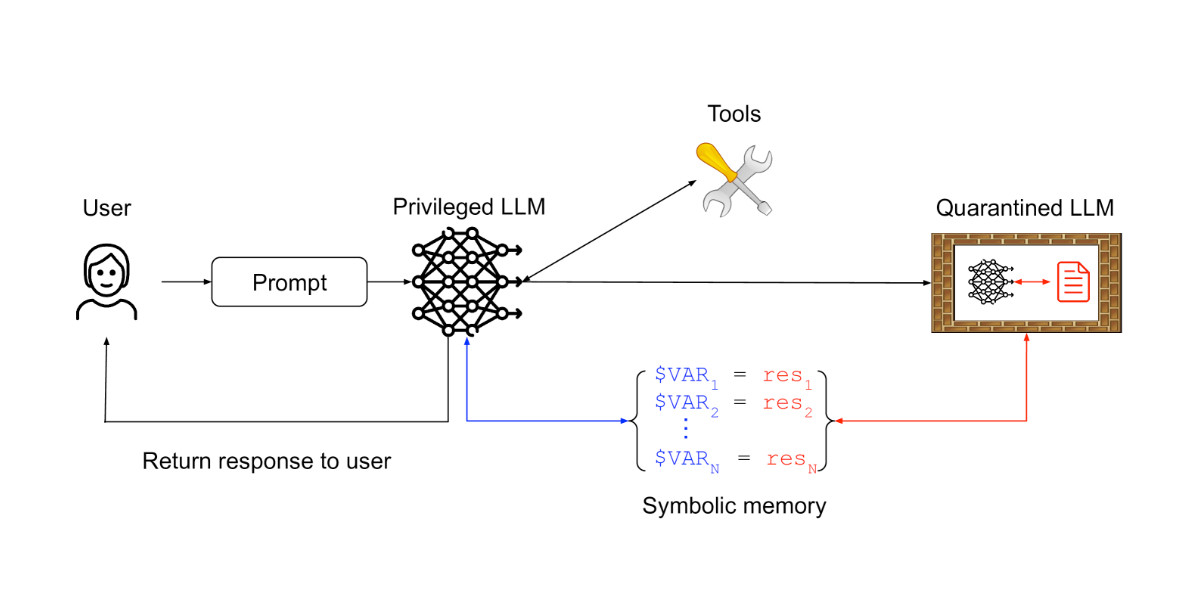

"Design Patterns for Securing LLM Agents against Prompt Injections" is an excellent new paper that provides six design patterns to help protect tool-using LLM systems (call them "agents" if you like) against prompt injection attacks.

Here are my notes on the paper: https://simonwillison.net/2025/Jun/13/prompt-injection-design-patterns/