The next-generation Xbox, codenamed "Project Helix," will reportedly deliver a 6x jump in rasterization performance and a 20x increase in ray tracing performance over the Series X. According to a new technical analysis from Moore’s Law is Dead, the console’s AMD Magnus APU will bridge the gap between console and PC gaming, but it comes with a significant catch: early estimates suggest a target price between $999 and $1,200 for its possible 2027 launch.

The Xbox Project Helix Will Deliver More Than 120 FPS Gameplay

While the Magnus APU reportedly features only 30% more […]



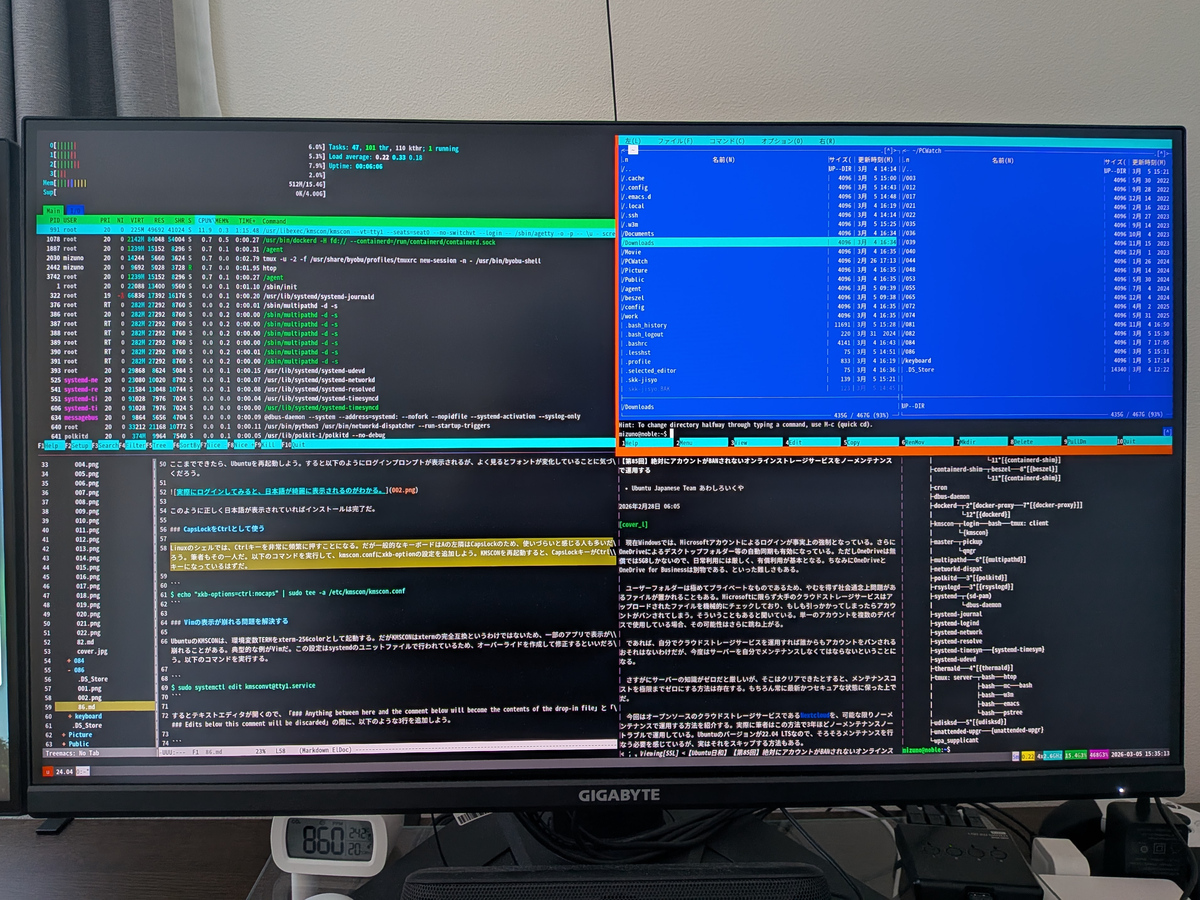

Trending on Zenn


かちかちなまこ
