Coming soon in #Fedify 1.5.0: Smart fan-out for efficient activity delivery!

After getting feedback about our queue design, we're excited to introduce a significant improvement for accounts with large follower counts.

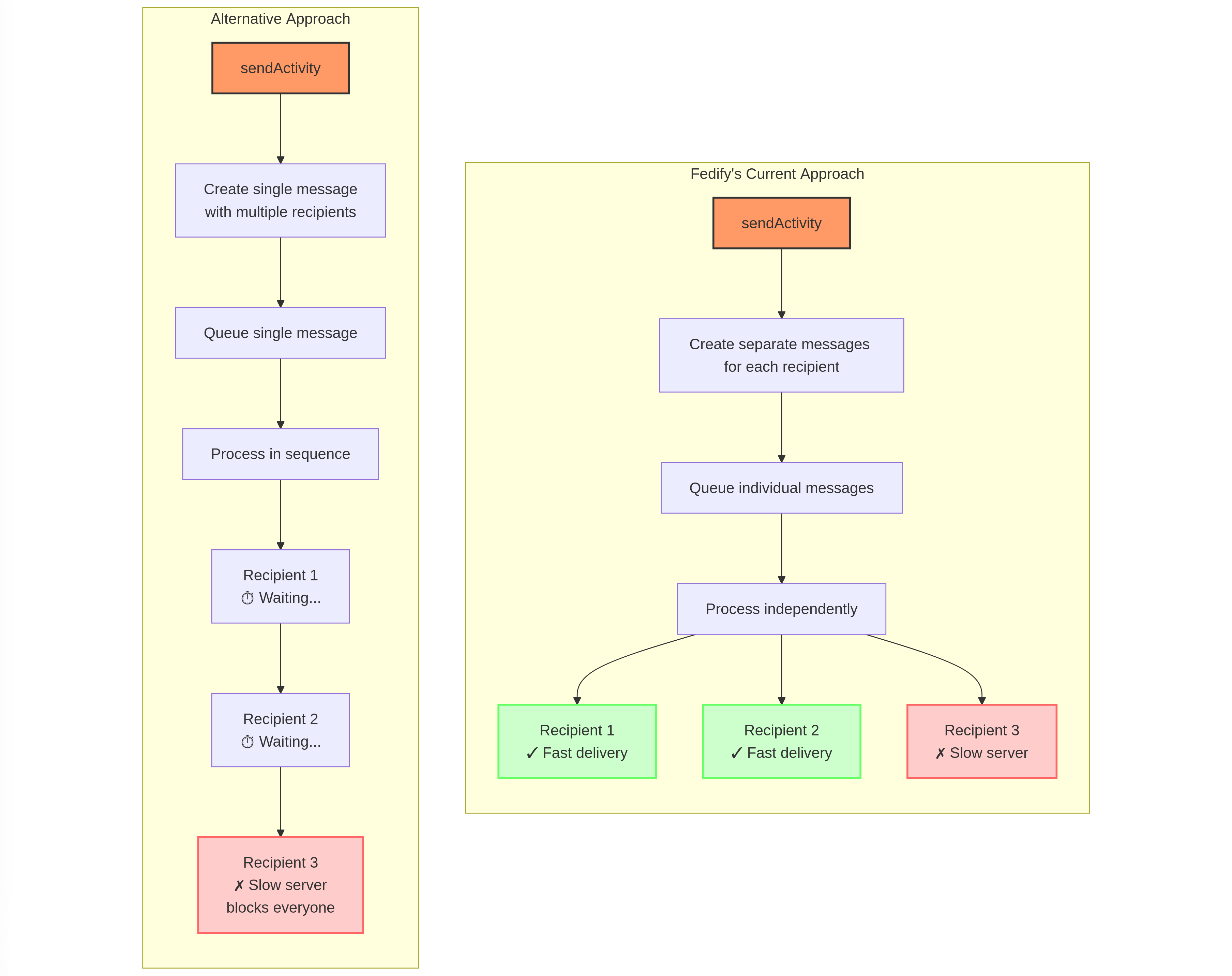

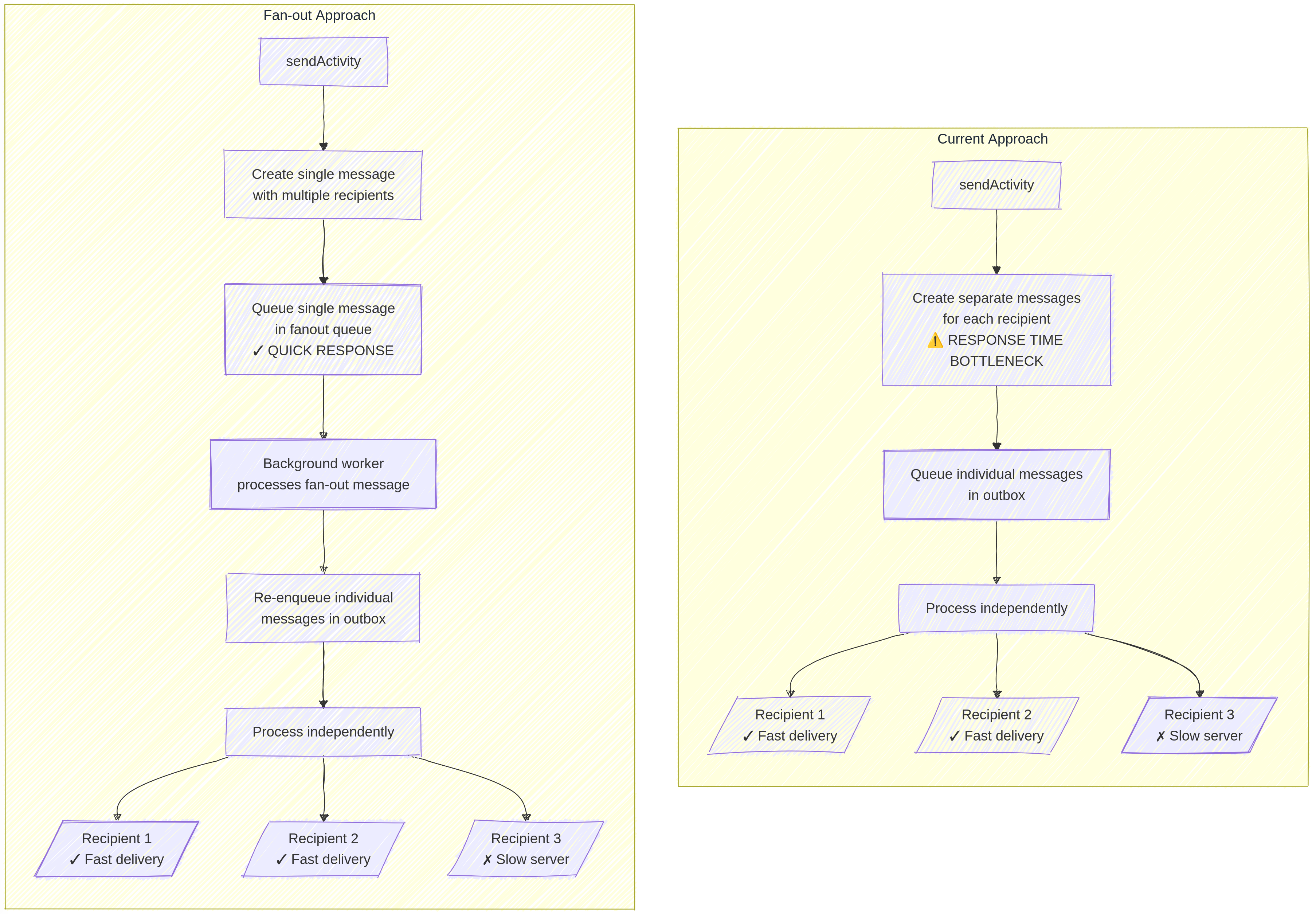

As we discussed in our previous post, Fedify currently creates separate queue messages for each recipient. While this approach offers excellent reliability and individual retry capabilities, it causes performance issues when sending activities to thousands of followers.

Our solution? A new two-stage “fan-out” approach:

- When you call Context.sendActivity(), we'll now enqueue just one consolidated message containing your activity payload and recipient list
- A background worker then processes this message and re-enqueues individual delivery tasks
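To make the two stages concrete, here is a minimal, self-contained sketch of the idea using an in-memory queue. The message shapes, `InMemoryQueue`, `sendActivity()`, and `processNext()` are all hypothetical illustrations, not Fedify's internal types or APIs. Stage 1 writes a single message no matter how many recipients there are. Stage 2, running in the background, expands it into one delivery task per inbox, so each delivery can still be retried on its own.

```typescript
// HYPOTHETICAL message shapes: not Fedify's internal queue format.
interface FanoutMessage {
  type: "fanout";
  activity: string; // serialized activity payload, stored once
  inboxes: string[]; // every recipient inbox URL
}

interface OutboxMessage {
  type: "outbox";
  activity: string;
  inbox: string; // exactly one recipient per message
}

type QueueMessage = FanoutMessage | OutboxMessage;

// A trivial in-memory FIFO standing in for a real message queue backend.
class InMemoryQueue {
  readonly messages: QueueMessage[] = [];
  enqueue(message: QueueMessage): void {
    this.messages.push(message);
  }
  dequeue(): QueueMessage | undefined {
    return this.messages.shift();
  }
}

// Stage 1 (inside the request): one enqueue, regardless of recipient count.
function sendActivity(queue: InMemoryQueue, activity: string, inboxes: string[]): void {
  queue.enqueue({ type: "fanout", activity, inboxes });
}

// Stage 2 (background worker): expand fan-out messages into per-recipient
// tasks; "deliver" per-recipient tasks by recording the inbox.
function processNext(queue: InMemoryQueue, delivered: string[]): boolean {
  const message = queue.dequeue();
  if (message === undefined) return false;
  if (message.type === "fanout") {
    for (const inbox of message.inboxes) {
      queue.enqueue({ type: "outbox", activity: message.activity, inbox });
    }
  } else {
    // A real worker would POST message.activity to message.inbox here.
    delivered.push(message.inbox);
  }
  return true;
}

const queue = new InMemoryQueue();
sendActivity(queue, '{"type":"Create"}', [
  "https://a.example/inbox",
  "https://b.example/inbox",
]);
console.log(queue.messages.length); // 1
const delivered: string[] = [];
while (processNext(queue, delivered)) {
  /* drain the queue */
}
console.log(delivered); // [ 'https://a.example/inbox', 'https://b.example/inbox' ]
```

The point of the split is that the caller only pays for stage 1; the per-recipient work still happens, just outside the web request.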

The benefits are substantial:

- Context.sendActivity() returns almost instantly, even for massive follower counts
- Memory usage is dramatically reduced by avoiding payload duplication
- UI responsiveness improves since web requests complete quickly
- The same reliability for individual deliveries is maintained
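A rough back-of-the-envelope model shows why storing the payload once matters for what the request has to write. This is a hypothetical cost model, not a measurement of Fedify: it counts only payload and inbox-URL bytes enqueued during the call, ignores any per-message overhead a real queue backend adds, and uses made-up sizes.

```typescript
// HYPOTHETICAL cost model for illustration only: counts payload and inbox-URL
// bytes written to the queue while the request is still in flight, ignoring
// any per-message framing a real queue backend would add.

// One queue message per recipient: every message repeats the payload.
function perRecipientBytes(payloadBytes: number, inboxBytes: number[]): number {
  return inboxBytes.reduce((sum, inbox) => sum + payloadBytes + inbox, 0);
}

// One fan-out message: the payload is stored once, next to all inbox URLs.
function fanoutBytes(payloadBytes: number, inboxBytes: number[]): number {
  return payloadBytes + inboxBytes.reduce((sum, inbox) => sum + inbox, 0);
}

// Made-up numbers: a 2,000-byte activity sent to 10,000 followers whose
// inbox URLs are 40 bytes each.
const followerInboxes = new Array<number>(10_000).fill(40);
console.log(perRecipientBytes(2_000, followerInboxes)); // 20400000
console.log(fanoutBytes(2_000, followerInboxes)); // 402000
```

Under these assumptions the request writes about 0.4 MB instead of about 20 MB; the per-recipient tasks are still created later, but by the background worker rather than while the web request waits.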

For developers with specific needs, we're adding a fanout option with three settings:

- "auto" (default): Uses fan-out for large recipient lists and direct delivery for small ones
- "skip": Bypasses fan-out when you need a different payload per recipient
- "force": Always uses fan-out, even with few recipients
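The three settings boil down to a small decision. The sketch below is hypothetical: `shouldFanOut()` is not a Fedify function, and the post does not say where "auto" draws the line between small and large recipient lists, so `HYPOTHETICAL_AUTO_THRESHOLD` is a placeholder value.

```typescript
type FanoutMode = "auto" | "skip" | "force";

// HYPOTHETICAL cutoff: the real threshold used by "auto" is not stated in
// this post, so this value is only a placeholder for illustration.
const HYPOTHETICAL_AUTO_THRESHOLD = 5;

// Returns true when delivery should go through a single fan-out message,
// false when individual delivery messages should be enqueued directly.
function shouldFanOut(mode: FanoutMode, recipientCount: number): boolean {
  switch (mode) {
    case "force":
      return true; // always fan out, even for one recipient
    case "skip":
      return false; // e.g. when each recipient needs a different payload
    case "auto":
      return recipientCount >= HYPOTHETICAL_AUTO_THRESHOLD;
  }
}

console.log(shouldFanOut("auto", 3)); // false (with the placeholder threshold)
console.log(shouldFanOut("force", 1)); // true
```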

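```typescript
// Example with a custom fanout setting
// (assumes `ctx` is a Fedify Context and `recipients`/`activity` are already defined)
await ctx.sendActivity(
  { identifier: "alice" },
  recipients,
  activity,
  { fanout: "skip" }, // Directly enqueues individual messages
);
```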

This change represents months of performance testing and should make Fedify work beautifully even for extremely popular accounts!

For more details, check out our docs.

What other #performance optimizations would you like to see in future Fedify releases?

#ActivityPub #fedidev
