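I wanted to build not a "fast RAG" but a "RAG where I own my own data."

Building technology and frameworks is never easy. Listening to feedback from the field and finding a direction is sometimes hard, but it is surely a gate that has to be passed through.

While everyone is burning money to build RAG quickly, I keep refining an idea that started from the thought of building a poor man's RAG, a refined RAG, a controllable RAG.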

Pinterest: Hundreds of layoffs are meant to free up resources for AI

Pinterest plans to lay off up to fifteen percent of its workforce. This is meant to free up more resources for developing AI features and products.

#Entertainment #GenerativeAI #Internet #IT #KünstlicheIntelligenz #Mobiles #SocialMedia #Wirtschaft #news

So, now they know how real creators feel after having been ripped off by "AI"…

https://futurism.com/artificial-intelligence/ai-prompt-plagiarism-art

#tech #technology #BigTech #AI #ArtificialIntelligence #LLM #LLMs #MachineLearning #GenAI #generativeAI #AISlop #Meta #Google #NVIDIA #gemini #OpenAI #ChatGPT #anthropic #claude

Imagine the FSF was developing a hypothetical software license under the branding of GPLv4 that dealt with the rise of LLMs. Which of the following copyleft features would appeal? #floss #linux #fsf #FreeSoftware #stochasticParrots #llm #eliza #ai #generativeAI #Programming #copyleft

I find the idea of AI generated code most acceptable / least offensive in the following kinds of software.

Anybody with an opinion is welcome to vote. Boosts welcome. #llm #stochasticParrots #ai #generativeAI #eliza #floss #programming #gamedev

@b0rk (Julia Evans) This is definitely not too big of a question, but it's a serious one! We too worry about free software culture, which I agree can be dogmatic and rigid. We at #SFC have worked hard to keep ourselves focused on our ethics and principles, while also acknowledging that we live in a complex world.

We've acknowledged how difficult it is to live in a purely #freesoftware world. You can see the keynotes @bkuhn (Bradley M. Kuhn) and I gave over the years; here's one from #FOSDEM:

https://archive.fosdem.org/2019/schedule/event/full_software_freedom/

@b0rk (Julia Evans) We strive to stay open to new technology and new ways of doing things, while remaining focused on how things could and should be. You can see the aspirational statement we made about #generativeAI:

https://sfconservancy.org/news/2024/oct/25/aspirational-on-llm-generative-ai-programming/

We seek to frame things positively and meet people where they are while not shying away from difficult conversations.

We choose to personally inconvenience ourselves so others experience freedom, even if it means we ourselves have to use more proprietary software. #sfc

That's Late Stage Capitalism for you:

"More than 20% of the videos that YouTube’s algorithm shows to new users are “AI slop” – low-quality AI-generated content designed to farm views, research has found.

The video-editing company Kapwing surveyed 15,000 of the world’s most popular YouTube channels – the top 100 in every country – and found that 278 of them contain only AI slop.

Together, these AI slop channels have amassed more than 63bn views and 221 million subscribers, generating about $117m (£90m) in revenue each year, according to estimates.

The researchers also made a new YouTube account and found that 104 of the first 500 videos recommended to its feed were AI slop. One-third of the 500 videos were “brainrot”, a category that includes AI slop and other low-quality content made to monetise attention.

The findings are a snapshot of a rapidly expanding industry that is saturating big social media platforms – from X to Meta to YouTube – and defining a new era of content: decontextualised, addictive and international.

A Guardian analysis this year found that nearly 10% of YouTube’s fastest-growing channels were AI slop, racking up millions of views despite the platform’s efforts to curb “inauthentic content”."

Select all statements you agree with. Specifically curious about respondents from the #floss crowd, but everyone with an opinion should participate. Boosts welcome for larger sample size. #llm #stochasticParrots #ai #generativeAI #eliza #programming

I published a personal recap of State of the Word 2025, with a focus on the AI panel I joined with Mary Hubbard, Matt Mullenweg, Felix Arntz, and James LePage.

I also wrote about the work behind the AI Experiments plugin and what it means for the future of AI in WordPress.

It's sad and shocking to see everyone losing their mind in real time.

There are some glaring obvious issues to be discussed when it comes to #GenerativeAI integration: environmental issues, issues of power and control, of accountability and impacts on society.

None of which are addressed when #WordPress is discussing #AI.

We lost #Mozilla, we are losing #WordPress, so a bunch of libertarians and their investors can wreck what's left of the western world. Sad times.

Delete these Chrome "extensions" right now: they collect conversations with generative AI and sell them to third parties | Forbes JAPAN official site

https://forbesjapan.com/articles/detail/87234

"・Urban VPN Proxy

・1ClickVPN Proxy for Chrome

・Urban Browser Guard

・Urban Ad Blocker"

"After installation, an automatic update adds functionality to intercept and capture conversations"

My piece with Nate Fast in HBR on generative AI and productivity is out today! I'm really proud of this piece, and I'm hoping it moves the conversation forward in this vital area. Also we violate Betteridge's law with this headline 😁

https://hbr.org/2024/01/is-genais-impact-on-productivity-overblown?ab=HP-hero-featured-text-1

"Datacenters in space are a terrible, horrible, no good idea."

https://taranis.ie/datacenters-in-space-are-a-terrible-horrible-no-good-idea/

#tech #technology #BigTech #space #satellites #data #server #computing #CloudComputing #AI #ArtificialIntelligence #LLM #LLMs #MachineLearning #GenAI #generativeAI #AISlop #Meta #Google #OpenAI #ChatGPT

All mentions of "AI" should be changed into "Brain Rot Inducer"…

"Do you want the Brain Rot Inducer to summarize this text for you?"

"The Brain Rot Inducer can auto-answer this e-mail for you!"

"Let the Brain Rot Inducer write this social media post for you!"

"Easily generate an image with the Brain Rot Inducer, and call it your own creation!"

#AI #ArtificialIntelligence #LLM #LLMs #MachineLearning #tech #technology #BigTech #GenAI #generativeAI #AISlop #Meta #Google #OpenAI #ChatGPT

"AI chatbots have conquered the world, so it was only a matter of time before companies started stuffing them into toys for children, even as questions swirled over the tech’s safety and the alarming effects they can have on users’ mental health.

Now, new research shows exactly how this fusion of kid’s toys and loquacious AI models can go horrifically wrong in the real world.

After testing three different toys powered by AI, researchers from the US Public Interest Research Group found that the playthings can easily verge into risky conversational territory for children, including telling them where to find knives in a kitchen and how to start a fire with matches. One of the AI toys even engaged in explicit discussions, offering extensive advice on sex positions and fetishes.

In the resulting report, the researchers warn that the integration of AI into toys opens up entire new avenues of risk that we’re barely beginning to scratch the surface of — and just in time for the winter holidays, when huge numbers of parents and other relatives are going to be buying presents for kids online without considering the novel safety issues involved in exposing children to AI."

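'Framework Act on the Development of Artificial Intelligence (AI) and the Establishment of a Foundation for Trust' (short title: AI Basic Act)

* Takes effect January 22, 2026.

* AI business operators must notify users that the service uses AI technology. (Example: "This chatbot is an AI.")

* For content created using generative AI, AI business operators must clearly label it as AI-generated.

* Violating the 'notification and labeling obligations' above may result in an administrative fine of up to 30 million won.

* Overseas AI business operators that have no address or place of business in Korea and meet certain criteria must designate a domestic representative to handle AI-related matters.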

"Cybersecurity researchers have disclosed a new set of vulnerabilities impacting OpenAI's ChatGPT artificial intelligence (AI) chatbot that could be exploited by an attacker to steal personal information from users' memories and chat histories without their knowledge.

The seven vulnerabilities and attack techniques, according to Tenable, were found in OpenAI's GPT-4o and GPT-5 models. OpenAI has since addressed some of them.

These issues expose the AI system to indirect prompt injection attacks, allowing an attacker to manipulate the expected behavior of a large language model (LLM) and trick it into performing unintended or malicious actions, security researchers Moshe Bernstein and Liv Matan said in a report shared with The Hacker News."

https://thehackernews.com/2025/11/researchers-find-chatgpt.html

"To be clear, everybody is losing money on AI. Every single startup, every single hyperscaler, everybody who isn’t selling GPUs or servers with GPUs inside them is losing money on AI. No matter how many headlines or analyst emissions you consume, the reality is that big tech has sunk over half a trillion dollars into this bullshit for two or three years, and they are only losing money.

So, at what point does all of this become worth it?

Actually, let me reframe the question: how does any of this become worthwhile? Today, I’m going to try and answer the question, and have ultimately come to a brutal conclusion: due to the onerous costs of building data centers, buying GPUs and running AI services, big tech has to add $2 Trillion in AI revenue in the next four years. Honestly, I think they might need more.

No, really. Big tech has already spent $605 billion in capital expenditures since 2023, with a chunk of that dedicated to 5-year-old (A100) and 4-year-old (H100) GPUs, and the rest dedicated to buying Blackwell chips that The Information reports have gross margins of negative 100%:"

https://www.wheresyoured.at/big-tech-2tr/

#AI #GenerativeAI #OpenAI #Nvidia #AIBubble #Economy #Economics #BigTech

The AI industry wants us to believe AI superintelligence is the real threat from generative AI.

But that narrative was crafted to distract from the many ways genAI is being used to tear our societies apart, as we saw this week when a deepfake video rocked the Irish election. It must be reined in.

https://disconnect.blog/generative-ai-is-a-societal-disaster/

AI slop exposed in US court rulings

AI slop in court filings is a plague. Now decisions by two US courts have been exposed as well. An intern is to blame, says one judge who was caught.

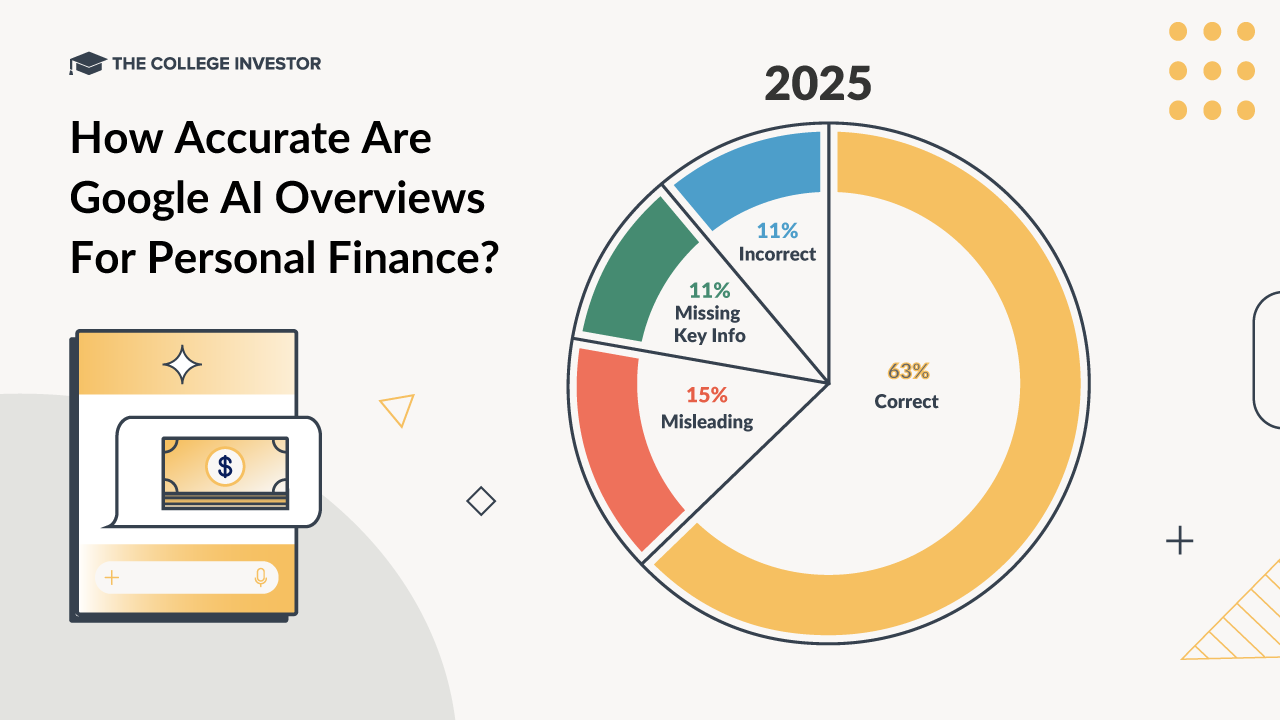

"Google AI overviews are misleading or inaccurate in 37% of finance-related searches, according to The College Investor's latest analysis. This is an improvement from last year, where 43% of AI Overviews were inaccurate - but a one-third error rate is troubling when it comes to personal finance.

This is causing consumer confusion, and potentially harming Americans' finances. The overviews were especially bad when it comes to tax, insurance, and financial aid related queries.

What's Happening: Over the last several years, Google has been rolling out AI-driven answers in search results. At the top of the search results they show AI Overviews, and they're now expanding the use of AI Mode. The problem is they are plagued with inaccurate answers. And experts say it's a serious issue."

https://thecollegeinvestor.com/66208/37-of-google-ai-finance-answers-are-inaccurate-in-2025/

We Asked Google Search 100 Money Questions - Here’s What It Got Wrong

Google’s AI Overviews still struggles with personal finance topics, from student loans to taxes, according to new research.

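The stealth-marketing paradise Sora 2 brings: with a book cover image and a simple prompt, a stealth-marketing video promoting a new release is done in no time [Generative AI Case Files] With illegal marketing running rampant, can we prevent the abuse of advanced video generation AI? (1/5) | JBpress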

https://jbpress.ismedia.jp/articles/-/91018

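"OpenAI has released its new video generation AI 'Sora 2', and the company has stated that it 'blocks depictions of public figures by default.' However, this restriction has a major loophole: it turned out that 'generating footage of public figures who have already died is permitted.' As a result, examples of freely recreating deceased people in AI videos are appearing one after another on social media."

OpenAI's Sora 2 churns out videos of deceased people, such as "Michael Jackson doing comedy" | Gadget Gate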

https://gadget.phileweb.com/post-111135/

If your business or industry wouldn’t exist without exploitation, then quite simply, your business or industry shouldn’t exist.

https://www.theverge.com/news/674366/nick-clegg-uk-ai-artists-policy-letter

Beyond the entirely understandable pain and disgust expressed here by Zelda Williams (daughter of Robin Williams), I find her definition of generative AI particularly apt:

"Human Centipede of Content".

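Videos generated with Sora 2 are mass-posted to other social networks "with their AI origin hidden" to rack up views; watermark-removal tools and Altman's response on copyright (Generative AI Close-up) | TechnoEdge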

https://www.techno-edge.net/article/2025/10/06/4639.html

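"Videos generated with Sora 2 are normally designed to have a watermark added automatically. This mark is a feature that makes clear the content is AI-generated and ensures transparency."

"On the other hand, web services that remove Sora 2 watermarks have already appeared. I tried one, and removal finished in the browser within a few minutes."

"The current Sora 2 also allows derivative works of anime characters and the like that would likely run into copyright issues. In other words, it can generate videos not only of non-existent subjects but also of existing characters and people, and posting them is easy. In this situation, social media platforms are presumably also considering how to respond."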

Excellent article that provides a good summary of all my thoughts on AI: the problem is not in taking our jobs, but in the systematic erosion of our ability to think and focus.

"A recent report by content delivery platform company Fastly found that at least 95% of the nearly 800 developers it surveyed said they spend extra time fixing AI-generated code, with the load of such verification falling most heavily on the shoulders of senior developers.

These experienced coders have discovered issues with AI-generated code ranging from hallucinating package names to deleting important information and security risks. Left unchecked, AI code can leave a product far more buggy than what humans would produce.

Working with AI-generated code has become such a problem that it’s given rise to a new corporate coding job known as “vibe code cleanup specialist.”

TechCrunch spoke to experienced coders about their time using AI-generated code about what they see as the future of vibe coding. Thoughts varied, but one thing remained certain: The technology still has a long way to go.

“Using a coding co-pilot is kind of like giving a coffee pot to a smart six-year-old and saying, ‘Please take this into the dining room and pour coffee for the family,’” Rover said.

Can they do it? Possibly. Could they fail? Definitely. And most likely, if they do fail, they aren’t going to tell you. “It doesn’t make the kid less clever,” she continued. “It just means you can’t delegate [a task] like that completely.”"

#AI #GenerativeAI #VibeCoding #SoftwareDevelopment #Programming #AIBabysitters

#GenerativeAI, #FoundationModels, #LLMs, and all of that hokey nonsense shall not appear in my #robotics roadmaps as anything other than a neat research item until it can demonstrate a feasible path to #FunctionalSafety or mathematical completeness.

I lead #Product on the largest mobile-#robotic fleet known to humankind. I will not entrust decisions that could maim or kill to a pile of nondeterminate math prone to “hallucinations” or confabulation.

The Futzing Fraction - At least some of your time with genAI will be spent just kind of… futzing with it.

Diffusion models are a kabbalistic miracle of math. At the core, they’re just incredibly advanced denoising systems, formally known as Denoising Diffusion Probabilistic Models (DDPMs), e.g. Stable Diffusion and DALL-E 2.

During training, the model is shown hundreds of millions of images paired with text descriptions. To teach it how to "clean up" noisy images, we intentionally add random noise to each training image. The model’s job is to learn how to reverse it using the text prompt as a guide for where and how to remove the noise.

When you generate an image, the model performs this process in reverse. It starts with a latent space of pure random noise and gradually subtracts more and more noise with each diffusion step. It's synthesizing an image from scratch by removing all of the noise until the image remains, organizing the chaos into whatever you asked it to generate.
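The noising and denoising arithmetic described above can be sketched in a few lines. This is a toy under stated assumptions, not how any particular product implements it: it uses the standard DDPM closed form on a short list of numbers instead of an image, the function names and the linear schedule values are made up for illustration, and the "predicted" noise is simply the true noise handed back, standing in for what a trained, text-conditioned network would have to estimate.

```python
import math
import random

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, rising linearly (illustrative values)."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_alphas(betas):
    """alpha_bar[t] = product of (1 - beta) up to and including step t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(x0, t, alpha_bars, rng):
    """Forward process: jump straight to step t. Returns (x_t, noise used)."""
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    signal_scale = math.sqrt(alpha_bars[t])
    noise_scale = math.sqrt(1.0 - alpha_bars[t])
    x_t = [signal_scale * x + noise_scale * n for x, n in zip(x0, noise)]
    return x_t, noise

def estimate_clean(x_t, predicted_noise, t, alpha_bars):
    """Invert the forward formula, given an estimate of the added noise."""
    signal_scale = math.sqrt(alpha_bars[t])
    noise_scale = math.sqrt(1.0 - alpha_bars[t])
    return [(x - noise_scale * n) / signal_scale
            for x, n in zip(x_t, predicted_noise)]

rng = random.Random(0)
alpha_bars = cumulative_alphas(linear_beta_schedule(1000))

pixels = [0.1, -0.5, 0.9, 0.0]            # stand-in for a tiny "image"
noisy, true_noise = add_noise(pixels, 500, alpha_bars, rng)

# A trained model would predict the noise from (noisy, step, prompt).
# Here the true noise is passed back in, so the recovery is exact.
recovered = estimate_clean(noisy, true_noise, 500, alpha_bars)
```

In a real sampler the noise estimate is imperfect, which is why generation proceeds over many small steps from pure noise rather than one big jump like the one shown here.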

@cadey (Xe)

Funnily, the people who are trying to guess which text descriptions had been used during the training phase, call themselves "prompt engineers" instead of "guessers of the lost description".

Introducing study mode - A new way to learn in ChatGPT that offers step by step guidance instead of quick answers.

Intro Post: Obvs #politics #ScottishPolitics #ScottishIndependence

All things #Ukraine

I’m also interested in #ArtificialIntelligence #AI #GenerativeAI & its potential impact on politics

My first proper word was ‘book’ so expect #Books #Reading - and #ScienceFiction #SciFi because I’ve been hooked since the days of Outer Limits & the Twilight Zone

All things #StarTrek

#Catholic stuff - #Cats & some other beasties - & #DuolingoSpanish #DuolingoItalian & er #DuolingoKlingon 😂🖖🖖

It has been known for a long time that, when it comes to AI training, quality is much more important than quantity. Yet Big Tech goes for quantity because it is cheaper and takes less time to train a model on non-curated garbage scraped from the internet than to take the time and the (human) effort of curating the data. That is one of the problems with their models: Garbage in, garbage out.

#AI #GenAI #generativeAI #BigTech

Thread 1/3

Like peeing in the sea: poisoning AI reportedly doesn't help

Trying to poison data helps as little as peeing in the sea: it remains a sea. So says the vendor of a protection software product.

AI Repo of the Week: Generative AI for Beginners with JavaScript | by Dan Wahlin.

https://blog.codewithdan.com/ai-repo-of-the-week-generative-ai-for-beginners-with-javascript/

It's official! We've launched a toolkit to help teachers and students adapt classes to the sudden rise of generative AI. Browse recommended policies, watch tutorials, and learn strategies for integrating—or excluding—text- and media-generators in your classes.

https://umaine.edu/learnwithai

#EdChatme #EdChat #EdTech #STEMEducation #HigherEd #Edutooters #AcademicMastodon #OpenAI #ChatGPT #DALLE #GenerativeAI #AIimages #AIethics #GenerativeAIArt #DeepLearning #MachineLearning #llm #plagiarism

Leveraging the power of NPU to run Gen AI tasks on Copilot+ PCs.

Readings shared May 30, 2025. https://jaalonso.github.io/vestigium/posts/2025/05/30-readings_shared_05-30-25 #AIforCode #Agda #GenerativeAI #ITP #LeanProver #Math #Rocq

I just put up a fresh post about unproductive criticism surrounding artificial intelligence with an attempt to advocate for more nuanced, constructive engagement from both sides of the discussion.

If all the genAI tools are as great and beloved as the corps would want us to believe, there would be no reason to force feed it to us.

#SteveJobs said computers are "bicycles for the mind." Bicycles use geometry & physics to help one go further under the same amount of effort they themselves put in.

I feel #GenerativeAI tools are "escalators for the mind". Escalators are expensive, complex, inefficient machines that churn constantly whether used or not. A convenience. They _are_ a helpful assistive device for those that need it (and many do!) and you _can_ go further, faster, if putting in effort, but _most_ use them out of laziness.

I'm starting to really like 'vibe coding'...

I've now had several clients come to me to take over projects that another agency was working on because the other agency started using generative AI. Apparently there was a real drop in product quality. I wonder why.

Basically vibe coding is making me money specifically because I'm not using it.

#Business #Debates

Is ‘ethical AI’ an oxymoron? · The unpleasant side effects of generative AI https://ilo.im/163agd

_____

#AI #GenerativeAI #Sustainability #Ethics #Design #ProductDesign #UiDesign #WebDesign #Development #WebDev

Readings shared April 23, 2025. https://jaalonso.github.io/vestigium/posts/2025/04/23-readings_shared_04-23-25 #CommonLisp #Education #GenerativeAI #Haskell #IsabelleHOL #LeanProver #Math #Python

Bullshit universities: the future of automated education. ~ Robert Sparrow, Gene Flenady. https://link.springer.com/article/10.1007/s00146-025-02340-8 #GenerativeAI #Education

2025 AIpub.social theme update 😍

Looking for an instance dedicated to the exploration of artificial intelligence? Consider creating an account or migrating your existing account here!

We also have a Discord server home to over 600 members, you can find it here: https://discord.gg/DBvh2vPbzR

Want to support AIpub.social? Best way to do that now will be by getting yourself 20% off your first year subscription to ThinkDiffusion Pro using this affiliate link:

https://www.thinkdiffusion.com/?via=AIpub

In addition to 20% of the first year subscription cost, you will also get an additional 20% more credits on your first deposit.

These commissions will help me cover server and storage costs for this instance, but also provide you with the following perks, see thread >>

#introduction It was on my todo list for a long time and now I'm #newhere - writing in En and De, hence also #neuhier

Interested in #Switzerland #swisspolitics #education #foss #digitalsustainability #gardening #SchweizerPolitik #Bildung #DigitaleNachhaltigkeit #gärtnern #AI #generativeAI #fediverse

I found some active accounts from organisations but it seems to be harder to find accounts representing persons.

Can you recommend any accounts from Switzerland or accounts focussing on AI? :)

Now starting at ICT.OPEN: a panel discussion on the Future of Education in the Era of Generative AI. As a freshly-minted assistant professor with increasingly many teaching responsibilities, I am keen!

https://ictopen.nl/programme/the-future-of-education-in-the-era-of-generative-ai

#ICTOpen #ICTOpen2025 #NWO #Conference #AcademicMastodon #AcademicChatter #ICT #Research #Networking #Utrecht #Jaarbeurs #ComputerScience #Education #GenerativeAI #AI

Do you use AI?